- This topic is empty.

-

Topic

-

Stable Diffusion is a deep learning, text-to-image model, mostly used to generate detailed images from text descriptions. It is a latent diffusion model (generative models are a class of machine learning models that can generate new data based on training data), a deep generative neural network developed by the CompVis group (LMU Munich). Stable Diffusion can run on most consumer hardware that has a modest GPU and at least 8 GB VRAM. Moving away from from previous proprietary text-to-image models that were accessible only via cloud services like DALL-E.

The Stable Diffusion model supports the ability to generate new images from nothing through the use of a text prompt (you can describe what to be included or left out from the final output). Existing images can be re-drawn to add new elements described by a text prompt ( “guided image synthesis”). The model also allows the use of prompts to partially alter existing images via inpainting (process where damaged, deteriorated, or missing parts of an artwork are filled in to present a complete image) and outpainting (an editing tool within DALL-E 2 that lets you expand your creation beyond the border of the image).

It can create high quality images of anything you can imagine in seconds – just type in a text prompt and hit Generate. GPU enabled and fast generation – Perfect for running a quick sentence through the model and get results back rapidly. They don’t collect and use any personal information, neither store your text or image. And, there are no limitations on what you can enter.

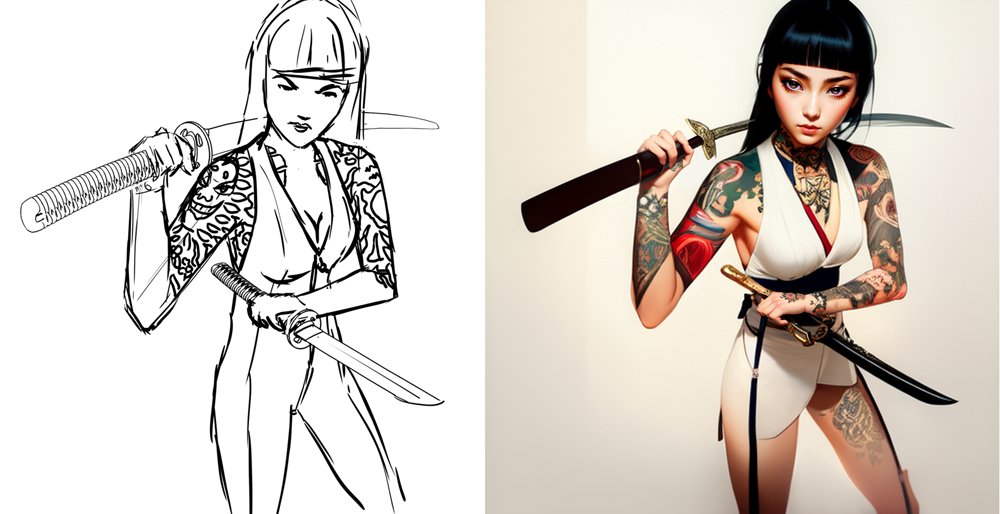

ControlNet is a neural network structure designed to control diffusion models by adding extra conditions. It brings some serious control to Stable Diffusion – you upload a picture, type a prompt and you get your new desired picture with the prompts you specified. The revolutionary thing about this is its solution to the problem of spatial consistency. Previously there was no real way to tell an AI model which parts of an input image to keep, ControlNet changes this by introducing a method to enable these models to use more input conditions that tell the AI model exactly what to do. The main dataset for Stable Diffusion was the 2b English language label subset of LAION 5b, a general crawl of the internet created by the German charity LAION. All Images created through Stable Diffusion are fully open source, falling under the CC0 1.0 Universal Public Domain Dedication.

Stable Diffusion have a photoshop plugin, where you can use the AI to generate and edit images. You just select a part of an image, type in what you want in that part of an image and using Stable Diffusion it generates what you request and puts it in your image. You can add rivers, dragons, castles, burgers and whatever else you desire to images. It is coming to Figma, Canva and more soon.

https://exchange.adobe.com/apps/cc/114117da/stable-diffusion

- You must be logged in to reply to this topic.